Everyone working in technology, digital rights, and Internet governance can attest to how fast-moving the space is. Because technology moves so fast, now is the time to talk about how it will impact our communities and countries. If you aren’t already having this conversation, you risk being left behind.

Many governments are paying close attention to emerging technology and how they can use it, particularly surveillance technology, digital ID systems, and artificial intelligence.

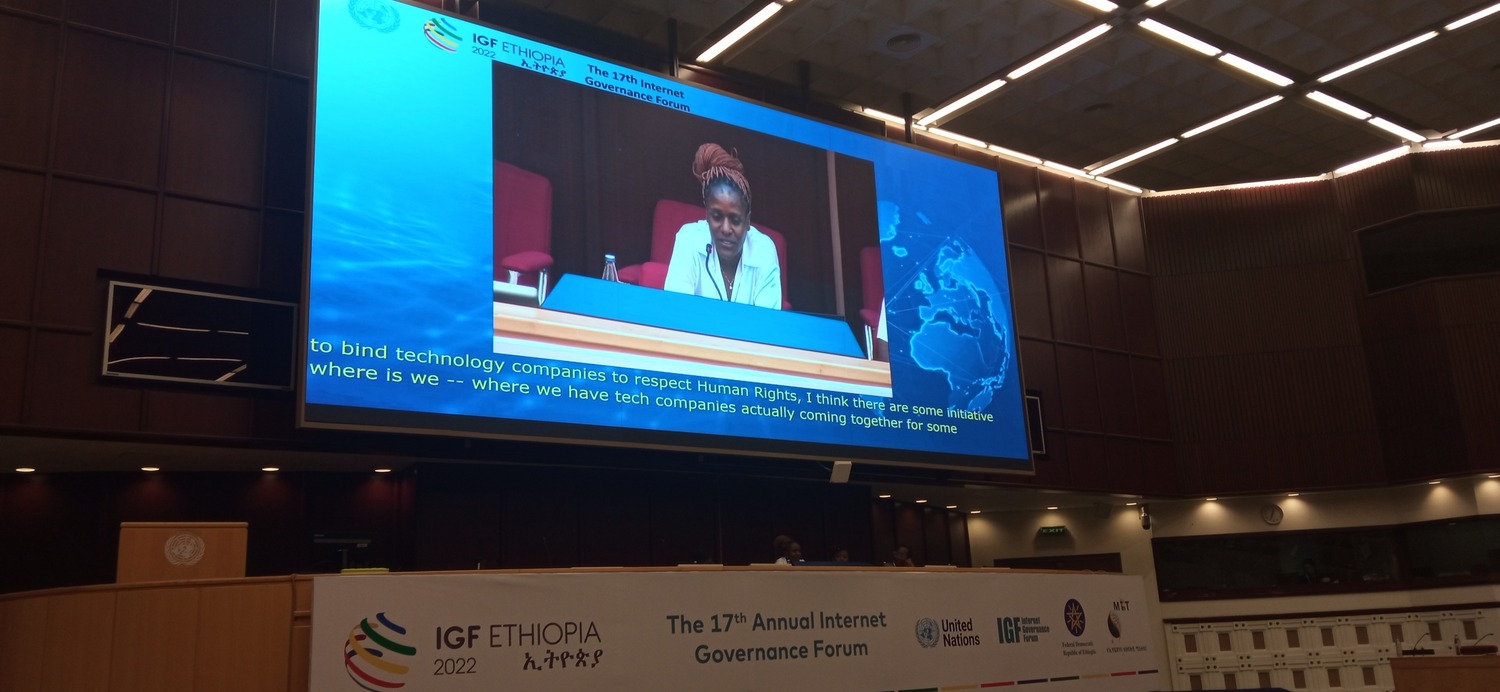

“When talking about emerging technology, more often than not, even now, the onus of making it perfect is on individuals, not how it should be. We need to shift the onus to do better regarding technology and its intersection with rights,” Smita V of the Association for Progressive Communications (APC) said during the Global IGF round table ‘Who’s Watching the Machines?’.

This sentiment aligns with KICTANet’s mission to catalyse ICT policy reforms in Africa through advocacy, capacity building, research, and stakeholder engagement.

Algorithm vs. Women in Politics

KICTANet Trustee Liz Orembo, who sat on the panel, shared her experience of training women in leadership on digital security at KICTANet. Liz and her team realized that one thing discouraging women in Kenya from seeking political seats is online gender-based violence, which sometimes takes the form of data breaches and misinformation and is amplified by social media algorithms designed to get people to interact with content regardless of whom that content harms.

Women politicians in Kenya face frequent attacks online, and social media amplifies these posts. The business and advertising models of social media create algorithms that boost whatever people engage with most, and the abuse of women online happens to be among that content.

Seeing how widely online abuse is amplified discourages more women leaders from running for office.

“So what we are trying to seek is a change of this. Discourage such business models to ensure there is no harm especially to a minority population that is actually disadvantaged,” Liz said.

Imported Surveillance: Who Owns the Data?

Speaking about her experience in Southern and Eastern Africa, Chenai Chair of Mozilla said she had noticed a growing potential for bias as more technology is imported, specifically surveillance cameras. She spoke of how surveillance helps increase state and personal security while asking, ‘Who owns the data?’ and ‘Where is this technology being procured?’

“The concern with this new tech is transparency because it seems as if we are getting technology in our regions to train the technology to recognize black faces. Then it is exported back for someone who’s like, great, it works better and doesn’t deal with bias,” she warned.

Surveillance increases as people navigate digital spaces. For women, surveillance has always been a tool of control, particularly from a patriarchal perspective. If you don’t fit in the box, there is increased scrutiny of what you are doing. One consequence is the shadowbanning of non-conforming social media accounts.

Access to New Tech Along Gender Lines

Minority genders and women in disadvantaged communities may lack access to new technologies.

Liz Orembo mentioned that the hindrances include affordability and societal norms. This gender gap in access makes these groups invisible in the data collected from the use of technology, which in effect creates biases and greater inequalities when machine learning and other automated decision-making techniques are employed to tailor user experiences.

“The thing about artificial technologies is that the more they learn your preferences and the more you work with them, they kind of adjust themselves to your patterns of usage. But the gender data gap emanating from challenges of access and use causes a cyclic pattern of exclusion where women are driven out of digital spaces because of decision bias,” Liz said.

Rage Against the Machines

Sheena Magenya of APC noted that although some use of tech is voluntary, there wasn’t much room to refuse it during the COVID-19 restrictions. People worldwide had to fill out digital documents that tracked their movements.

Think of the implications of this lack of choice for communities whose identities are criminalized, such as LGBTQI people. Information about identity was among the details required. It is not far-fetched to conclude that many had to lie about their gender identity or sexual orientation to avoid trouble.

“There is something about how technology is forced on communities without understanding the complexity and nuances of our lived experiences that is quite problematic. And therefore, you could argue that some people choosing not to help the machines learn is an act of resistance,” Sheena said.

This resistance to helping the machines is further justified because the effects of cooperation are long-term. For example, disclosing certain health conditions or disabilities often leads to discrimination, such as denial of health insurance.

Perhaps Your Rage Is Pointless

Srinidhi Raghavan of Rising Flame spoke about the rollout of the Universal Health ID in India. She examined the consequences of having machines automatically detect disability based on standard ways of understanding how disability presents itself.

“What this means in terms of what we disclose and what we don’t is very pertinent when it comes to disability. A lot of emerging technologies and AI that are there right now are meant to be able to find parallels or ‘detect’ disability,” said Srinidhi.

Given the widespread discrimination against people with disabilities, automatically detecting disability will have harmful impacts.

A lot of harm will be avoided once minorities are represented in big tech spaces. As I said in the forum, include marginalized voices in the design phase so that when you roll out a product, it works for everyone.
